I've blogged about lie detection before, and about how (spoiler alert) people are really bad at it. Markowitz and Hancock used a computer for their research, which let them run powerful analyses on their data, looking for linguistic patterns that distinguished retracted papers from unretracted ones. For instance, retracted papers contained, on average, 60 more "jargon-like" words than unretracted papers.

Full disclosure: I have not read the original paper, so I don't know exactly which terms they defined as jargon. While a computer can overcome a person's shortcomings at lie detection (though see the blog post above for a bit about that), jargon must be defined by a person. You see, jargon is in the eye of the beholder.
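As a rough illustration of that point, here is a minimal sketch of how a computer might count "jargon" once a person has supplied the word list. The word lists and the `count_jargon` function are hypothetical, made up for this example; they are not the dictionary Markowitz and Hancock used.

```python
import re

def count_jargon(text: str, jargon_terms: set[str]) -> int:
    """Count the words in text that appear in a human-supplied jargon list."""
    # Lowercase the text and split it into alphabetic words.
    words = re.findall(r"[a-z]+", text.lower())
    return sum(1 for word in words if word in jargon_terms)

# Two hypothetical readers with different ideas of what counts as jargon.
psychometrician_list = {"logits", "unidimensional"}
layperson_list = {"rasch", "thresholds", "logits", "items", "unidimensional"}

sample = "Rasch thresholds are reported in logits, and items must be unidimensional."
print(count_jargon(sample, psychometrician_list))  # 2
print(count_jargon(sample, layperson_list))        # 5
```

The counting is the easy, mechanical part. The same sentence scores 2 or 5 depending entirely on whose list you hand the computer.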

For instance - and forgive me, readers, I'm about to get purposefully jargon-y - my area of expertise is a field called psychometrics, which deals with measuring concepts in people. Those measurements can take a variety of forms: self-administered, interview, observational, etc. We create the measure, test and improve it over multiple iterations, pilot it in a group of people, analyze the results, and then fit the data to a model to see whether the measure is functioning the way a good measure should. (I'm oversimplifying here. Watch out, because I'm about to get more complicated.)

My preferred psychometric measurement model is Rasch, a logistic model that transforms ordinal scales into interval scales measured in logits (log-odds units). Some of the assumptions of Rasch are that items are unidimensional and that step difficulty thresholds progress monotonically, advancing by at least 1.4 logits and no more than 5.0 logits from one threshold to the next. Item point-measure correlations should be non-zero and positive, and item and person OUTFIT mean-squares should be less than 2.0. A non-significant log-likelihood chi-square shows good fit between the data and the Rasch model.
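For readers who like to see the moving parts, here is a minimal sketch of a few of the ideas in that paragraph. It uses the simplest (dichotomous, right/wrong) form of the Rasch model for the probability and fit parts, and checks threshold spacing against the 1.4 and 5.0 logit cutoffs listed above. The function names are my own, hypothetical choices, not the API of any Rasch software package.

```python
import math

def rasch_probability(theta: float, b: float) -> float:
    """Dichotomous Rasch model: probability that a person with ability theta
    (in logits) answers an item of difficulty b (in logits) correctly."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))

def log_odds(p: float) -> float:
    """Convert a probability to logits. Under the Rasch model this
    recovers theta - b, which is what puts measures on an interval scale."""
    return math.log(p / (1.0 - p))

def outfit_mnsq(responses, thetas, b):
    """Unweighted (OUTFIT) mean-square for one item: the average squared
    standardized residual across persons. Values near 1.0 mean the data are
    about as noisy as the model expects; the cutoff above is < 2.0."""
    z_squared = []
    for x, theta in zip(responses, thetas):
        p = rasch_probability(theta, b)
        variance = p * (1.0 - p)  # model variance of a 0/1 response
        z_squared.append((x - p) ** 2 / variance)
    return sum(z_squared) / len(z_squared)

def thresholds_ok(thresholds, min_gap=1.4, max_gap=5.0):
    """Check that rating-scale thresholds advance by at least min_gap and
    no more than max_gap logits (which also makes them monotonic)."""
    gaps = [later - earlier for earlier, later in zip(thresholds, thresholds[1:])]
    return all(min_gap <= gap <= max_gap for gap in gaps)

p = rasch_probability(theta=1.0, b=0.0)
print(round(p, 3))                            # 0.731
print(round(log_odds(p), 3))                  # 1.0
print(outfit_mnsq([1, 0], [0.0, 0.0], 0.0))   # 1.0
print(thresholds_ok([-2.0, 0.0, 2.0]))        # True
print(thresholds_ok([-0.5, 0.0, 0.5]))        # False (steps too close together)
```

A person one logit above an item's difficulty has about a 73% chance of getting it right, and converting that probability back to log-odds gives the one-logit difference again.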

Depending on your background or training, the paragraph above could be ridiculously complicated, mildly annoying, a good review, etc. My point is that in some cases jargon is unavoidable. Sure, there is another way of saying unidimensional - it means that a measure assesses (measures) only one concept (like math ability, not, say, math and reading ability) - but, at the same time, we have these terms for a reason.

Several years ago, I met my favorite author, Chuck Palahniuk, at the Barnes and Noble in Old Orchard - which, coincidentally, was the reading that got him banned from Barnes and Noble (I should blog about that some time). He took questions from the audience, and I asked him why he used so much medical jargon in his books. He told me he did so because it lends credibility to his writing, which seems to run counter to Markowitz and Hancock's findings above.

That being said, while jargon doesn't necessarily mean a person is being untruthful, it can still act as a kind of shield. It can separate a person from unknowledgeable others he or she may deem unworthy of the information (or at least unworthy of the time it would take to explain it). Jargon can also make something seem less trustworthy and truthful by making it harder to understand. These are called fluency effects, something else I've blogged about before.

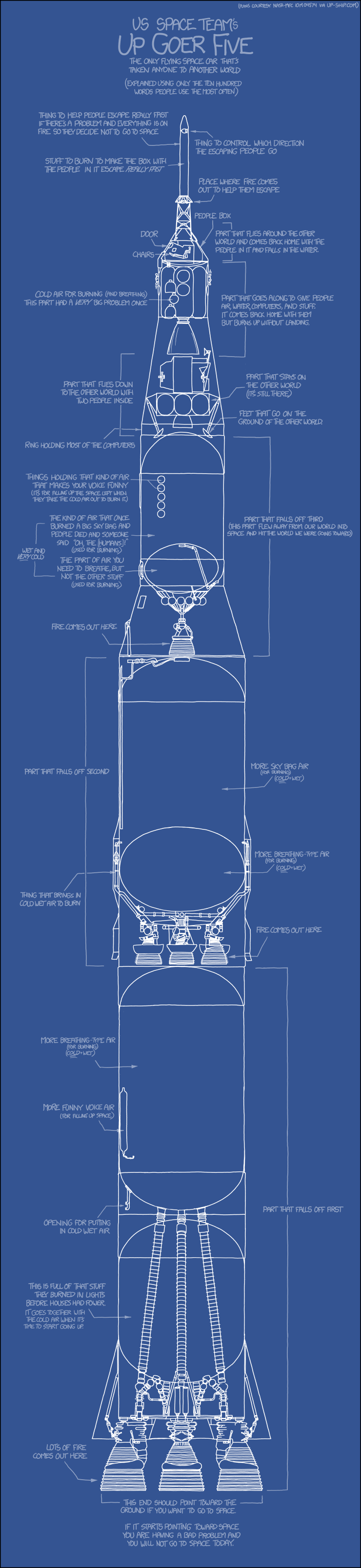

So where is the happy medium here? We have technical terms for a reason, and we should use them when appropriate. But sometimes they might not be. As I tried to demonstrate above, it depends on the audience. And on that note, I leave you with this graphic from XKCD, which describes the Saturn V rocket using common language (thanks to David over at The Daily Parker for sharing!).

Simplistically yours,

~Sara
